At its Google Next ’23 event this week, Google revealed how it is applying Duet AI, its generative AI built on the PaLM 2 foundation model, to security solutions in Google Cloud, including posture management, threat intelligence and detection, and network and data security.

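As Sunil Potti, vice president and general manager of security at Google Cloud, explained during a pre-event press briefing last week, the company is using the Duet AI model in three areas: threat intelligence from Mandiant, security operations in Chronicle and posture management in Security Command Center.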

“We have been working in (these) three areas where generative AI can bring real value to security,” said Potti at the press conference.

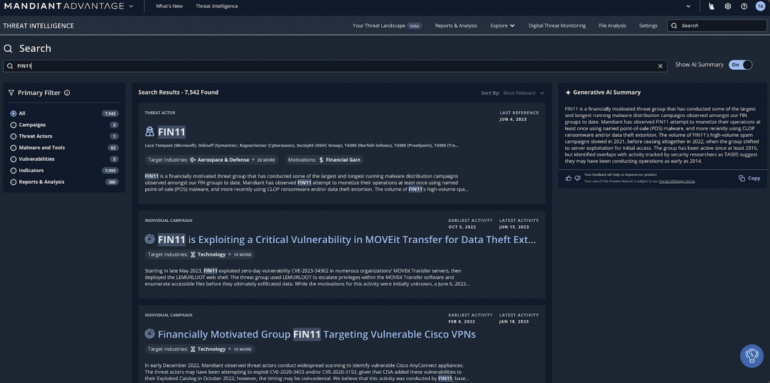

Potti explained that Google will augment its Mandiant threat intelligence unit, which it acquired in 2022, with Duet AI to accelerate detection of novel threats and improve visibility across a range of vulnerabilities, including in code. It will also distill Mandiant’s insights on the tactics, techniques and procedures used by threat actors into natural-language threat intelligence summaries that are easy to comprehend (Figure A).

Figure A

Integrating Duet AI into Chronicle explicitly addresses security operations workload and tool proliferation, and implicitly the shortage of security operators in SOC teams, Potti explained.

“I’ve never met a CISO who said they have enough talent or people on their team. Generative AI presents a lot of opportunities to scale talent so level one operations can be as productive as level two,” he said.

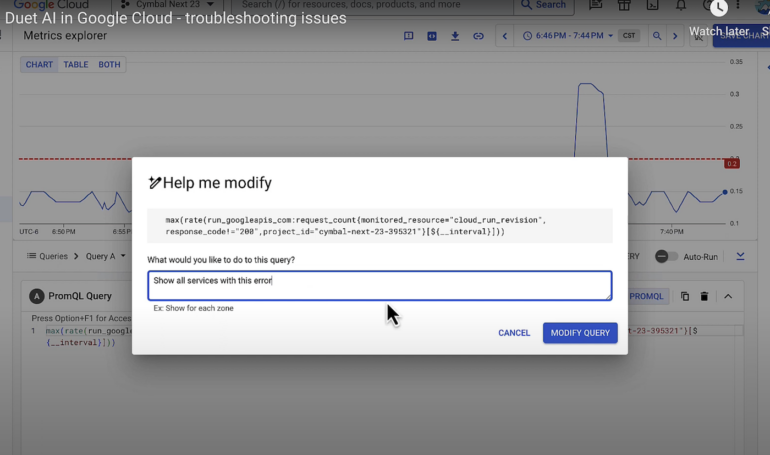

In Chronicle, Duet AI lets analysts query security data in natural language. “When I spoke of upleveling talent in security, this is a great example. You don’t have to be conversant in our unified data model syntax; instead, you can ask questions in natural language,” Potti said (Figure B).

Figure B

According to Potti, Mandiant generates vast amounts of data around indicators of compromise, which can be summarized using Duet AI. “This allows us to easily use Duet AI to look at thousands of intel reports, summarize that data for what is most specific to a user or circumstance and customize it to the type of audience receiving the report.”

The infusion of Duet AI into Chronicle will allow security administrators to generate summaries of all aspects of a security case, according to Potti, who said the AI-driven Chronicle platform will recommend next steps for defense.

Potti said that as part of its SOC team services, Google is also integrating Duet AI into its Security Command Center in order to provide visibility into customer vulnerabilities in Google Cloud and perform automated tasks. For example, it can determine if assets are vulnerable to attack, generate a summary of what resources can be exploited and provide suggestions on how to remediate the vulnerabilities.

He said the innovations extend to a new capability for attack path simulation, which can look across a user’s enterprise Google Cloud environment to identify which assets have vulnerabilities, face threats or have been compromised. It also looks for the potential exposure of an organization’s privileged data, or a threat actor’s ability to escalate privileges.

“Through Duet AI and our Security Command Center, we are helping to summarize those attack paths so security teams can quickly understand what these paths are and recommended steps to remediate some of those issues. These are improvements that help reduce toil security teams face every day,” he said.

Also at Google Next ’23, the company announced Mandiant Hunt for Chronicle. The new feature uses Mandiant personnel to do threat hunting on top of Chronicle environments in order to find threats that a security operations team may have missed.

According to Google, Mandiant experts build hypotheses using a robust and adaptable collection and analysis strategy alongside traditional automated hunting that searches for indicators of compromise.

“Think of this as a way to augment the customer security team today with the best incident response investigators in the world,” said Potti. “Because Chronicle brings in data from so many sources, we are able to leverage not only endpoint data but network and identity data to run these queries.”

According to Potti, Google tuned Duet AI for security functions using PaLM 2, its foundation model available through Vertex AI. Google added that PaLM 2 vastly improves on the first-generation PaLM’s advanced reasoning abilities, including code and math, classification and question answering, translation and multilingual proficiency, and natural language generation.

Potti said Google trained PaLM 2 on security data from its Mandiant threat intelligence unit to create a generative AI model it calls Sec-PaLM 2, which is optimized for security use cases. He noted its plug-in architecture means Google Cloud customers can customize it easily. “It is powering innovations and enabling customers and partners to use it as a model within the Vertex AI garden,” he said.

Google’s move mirrors a rapidly escalating arms race between threat actors and defenders around the application of generative AI and other machine learning tools. Attackers are using these new technologies to write malware, impersonate brands and conduct an array of social engineering exploits.

Check Point Software has been leveraging AI for about a decade, and approximately 40 out of its 70 engines use AI and machine learning. Pete Nicoletti, global chief information security officer at Check Point Software, said AI is mandatory at this point.

“These days, if you don’t have AI to battle AI, you are going to be a statistic,” he said. “It’s lowering the bar for attackers.” He noted that hackers are using AI in two ways — the first being code generation. “They are beating the guardrails of ChatGPT systems and having them create snippets of code rather than full-blown zero day ransomware,” he said. The second is the automated creation of spam — that is, taking hacked content and creating new social engineering exploits. “Between the scripting capabilities of AI and content creation, you can do it in minutes and launch it in seconds.”