On Sept. 12, OpenAI revealed a preview of its new model, OpenAI o1, designed to handle complex tasks such as writing code, solving math problems and performing deep reasoning. It is the first of the long-rumored next-generation AI family known as “Strawberry.”

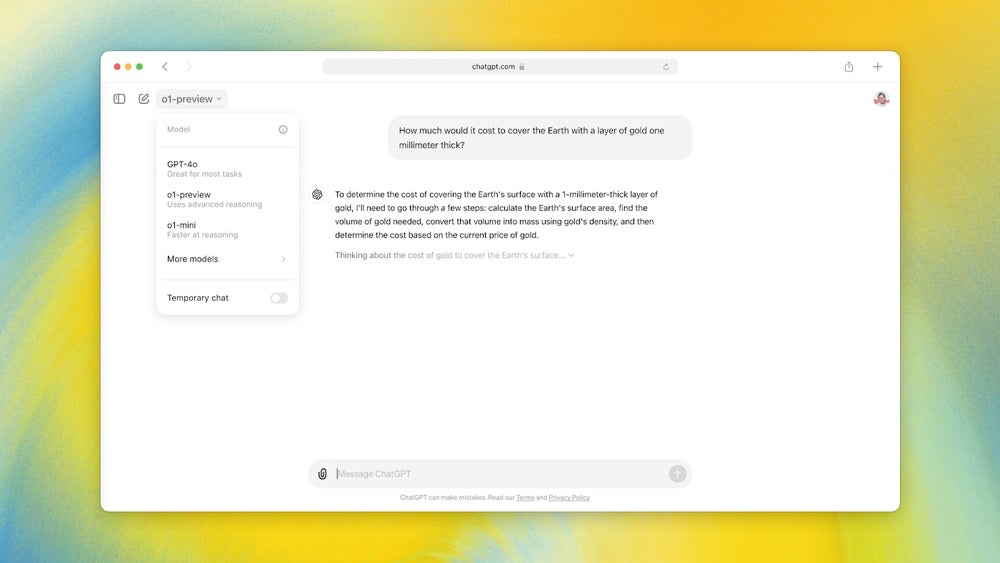

ChatGPT Plus, Team users, and developers with OpenAI API usage Tier 5 can now access the preview version of the full model, o1-preview.

These users can also access o1-mini — a smaller, faster version of the o1 model that is particularly effective at coding. As a smaller model, the tech giant says it is “80% cheaper than o1-preview, making it a powerful, cost-effective model for applications that require reasoning but not broad world knowledge.”

Open AI noted that ChatGPT Enterprise and Edu users will get access to both models beginning next week.

“We also are planning to bring o1-mini access to all ChatGPT Free users,” the company said in its release.

here is o1, a series of our most capable and aligned models yet:https://t.co/yzZGNN8HvD

o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it. pic.twitter.com/Qs1HoSDOz1

— Sam Altman (@sama) September 12, 2024

Instead of furthering GPT-4’s language capability, OpenAI o1 and o1-mini focus on science, creating and debugging code and math. A demonstration video shows the model building a playable game in the style of the Snake games in the 1970s. As OpenAI explained, o1 can be used by:

OpenAI says o1 placed in the 89th percentile on the competitive programming test Codeforces and scored among the top 500 students in the U.S. in a qualifier for the USA Math Olympiad.

By nature, o1 will take longer to answer than ChatGPT or GPT-4.

o1-preview can output a maximum of 32k tokens, while o1-mini can output a maximum of 64k tokens.A token can be as short as one character or as long as one word, depending on the complexity of the text. Both versions of the new model support text input only, not audio or images.

OpenAI created a best practices guide for developers to determine whether o1 is right for their work.

In the model’s system card, where OpenAI outlines red-teaming efforts and other security considerations, o1 received a “medium” safety rating in two categories. Independent research group Apollo Research noted o1 “has the basic capabilities needed to do simple in-context scheming,” meaning “gaming their oversight mechanisms as a means to achieve a goal.” On the other hand, the deeper reasoning gives the model a better understanding of safety policies.