Data cloud provider Snowflake has launched an open source large language model, Arctic LLM, as part of a growing portfolio of AI offerings that help enterprises leverage their data. Typical use cases include data analysis, such as sentiment analysis of reviews; chatbots for customer service or sales; and business intelligence queries, such as extracting revenue information.

Snowflake’s Arctic is being offered alongside LLMs from Meta, Mistral AI, Google and Reka in its Cortex product, which is currently available only in select regions. Snowflake said Cortex will become available in APAC in June, starting with Japan via the AWS Asia Pacific (Tokyo) region. The offering is expected to roll out to the rest of APAC and to customers worldwide over time.

Arctic will also be available via hyperscaler Amazon Web Services, as well as through other model gardens and catalogs used by enterprises, including Hugging Face, Lamini, Microsoft Azure, the NVIDIA API catalog, Perplexity and Together AI, according to the company.

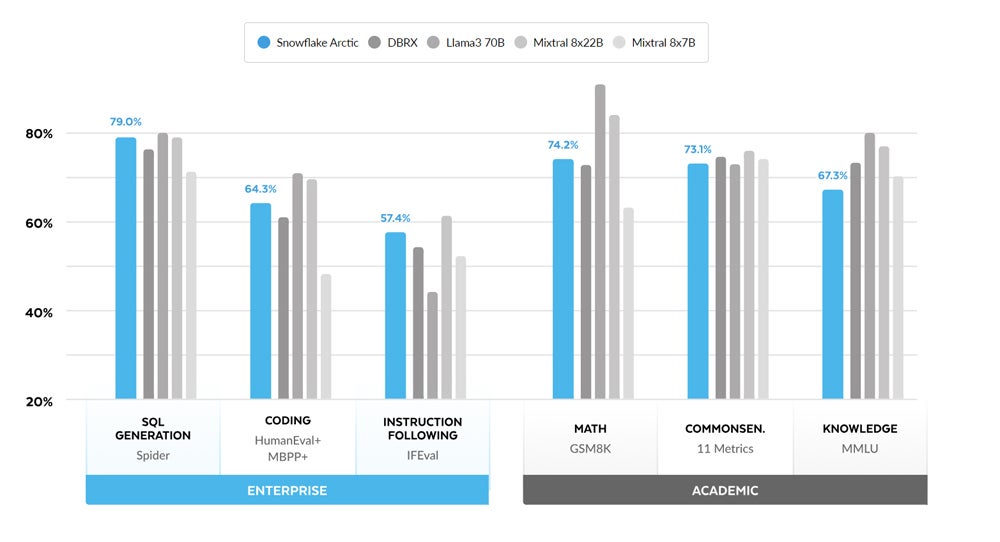

Arctic is Snowflake’s new “state-of-the-art” LLM, launched in April 2024 and designed primarily for enterprise use cases. The firm has shared data showing that Arctic scores well against other LLMs on several benchmarks, including SQL code generation and instruction following.

Snowflake’s Head of AI Baris Gultekin said the LLM took three months to build, an eighth of the time taken by some other models, on a budget of $2 million. This pushes the envelope for how quickly and cheaply an enterprise-grade LLM can be developed.

Arctic LLM aims to provide “efficient intelligence”: it excels at common enterprise tasks while being cheaper to use when training custom AI models on enterprise data. It has also been released under the open source Apache 2.0 licence.

Rather than aiming for the general-purpose world knowledge offered by many other open source LLMs, such as Meta’s Llama models, Arctic is designed specifically to meet enterprise demand for “conversational SQL data copilots, code pilots and RAG chatbots.”


Snowflake created its own “enterprise intelligence” metric to measure the LLM’s performance, combining coding, SQL generation and instruction-following capabilities.

Arctic compared favourably with models from Databricks, Meta and Mistral on common AI benchmarking tests, which assess LLMs in specific domains of capability and give each a percentage score. According to Snowflake, the model’s strong enterprise intelligence scores were notable given that the LLMs it was measured against had larger budgets.

Gultekin said Arctic offers enterprise customers a more cost-effective way to train custom LLMs on their own data. The model is also designed for efficient inference, making enterprise deployments cheaper and more practical.

Gultekin said Snowflake’s decision to release Arctic under an Apache 2.0 licence stems partly from the AI team’s deep background in open source. As part of the release, the firm is providing access to the model’s weights and code, as well as data recipes and research insights.

Snowflake believes both the industry and the product itself will advance faster through genuine open source developer contributions, while Gultekin said that being able to see under the hood would help enterprise customers trust the model more.

Snowflake’s Arctic release made a splash in the enterprise data and tech community, thanks to its speed, efficiency and SQL generation capabilities. Gultekin said the firm’s decision to “push the envelope on open source” has generated excitement in the research community.


“This is our first release, and it sets a really good benchmark. The market is going to evolve such that there will not be a single winner; instead, all customers are very interested in choice in the market. We have already seen a tonne of usage, and we expect that to continue,” he said.

Snowflake previously offered a series of machine learning solutions. During the generative AI boom of 2023, it acquired a number of AI organisations, including data search company Neeva and NXYZ, a company where Gultekin was chief executive officer and co-founder. Since then, Snowflake has built out its core generative AI platform and AI search capabilities, and is now adding LLMs.