NVIDIA has come a long way since the days when it specialized in graphics cards for gaming; its GPUs now supply much of the computing power behind enterprise generative AI. At NVIDIA GTC 2024, held March 18 – 21 in San Jose, California, generative AI was everywhere, from chatbots to art installations. Here are some of the top tech trends we saw at NVIDIA GTC this year: the technologies that came up again and again in presentations, in the keynote and press Q&A with NVIDIA CEO Jensen Huang, and on the show floor.

Billed as a technique for cutting down on AI “hallucinations” or inaccuracies, retrieval-augmented generation (RAG) lets a generative AI model pull relevant information from external sources, such as research papers or articles, and ground its answers in that material. RAG appeals to enterprise customers because it makes generated content more reliable.
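The basic RAG loop is simple to outline. Below is a minimal Python sketch under clearly stated assumptions: the documents and source IDs are hypothetical, and a word-count cosine similarity stands in for the learned embeddings and vector databases that production RAG systems typically use. It retrieves the passages most relevant to a question and adds them, labeled by source, to the prompt that would be sent to a model. It does not call any particular model, and it is not NVIDIA's NeMo API.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base. In a real RAG system these would be chunks of
# research papers, articles or internal company documents.
DOCUMENTS = {
    "manual-p12": "To replace the gripper motor, power down the robot arm and remove the four housing screws.",
    "faq-03": "The warranty covers parts and labor for two years from the date of purchase.",
    "notes-07": "Calibrate the arm after any motor replacement to restore positional accuracy.",
}


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; an assumed stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k (source_id, text) pairs most similar to the query."""
    q = tokenize(query)
    ranked = sorted(DOCUMENTS.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Augment the user's question with retrieved passages, labeled by source ID."""
    context = "\n".join(f"[{sid}] {text}" for sid, text in retrieve(query, k))
    return (
        "Answer using only the sources below and cite them by ID. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # The augmented prompt would then be passed to whichever LLM the system uses.
    print(build_prompt("How do I replace the gripper motor?"))
```

Labeling each passage with a source ID is what lets a model cite where an answer came from, and the instruction to admit when the sources lack an answer is one common way such systems try to reduce hallucinations.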

SEE: NVIDIA CEO Jensen Huang revealed the upcoming Blackwell-architecture GPUs and more during the conference keynote. (TechRepublic)

For example, Lenovo is an early adopter of NVIDIA’s newly announced NeMo framework with RAG, which Lenovo is using to build out its AI ecosystem for customers who work on Lenovo devices.

Many organizations at NVIDIA GTC pitched their offerings as “AI factories,” services that give enterprises access to the storage and compute power they need to build private AI.

NexGen Cloud, which calls its AI factory service “GPUaaS,” is among the companies that will provide access to NVIDIA’s Blackwell GPUs, which NVIDIA says can handle AI models of up to 10 trillion parameters (Figure A), later this year.

Ten-trillion-parameter workloads require enormous amounts of compute, and these providers are betting they can build a business model around supplying customers with just the right amount of that computing power.

“As those models get bigger and bigger, continuing to grow exponentially, the infrastructure that’s required to train, fine-tune and serve or provide inference for those at scale also needs to continue to grow to solve that problem,” said Mark Lohmeyer, vice president and general manager of compute and AI/ML infrastructure at Google Cloud, in an interview with TechRepublic at NVIDIA GTC 2024.

Storage needs to deliver high performance for structured data as well as for unstructured data such as documents, images and video, said Greg Findlen, senior vice president of product management of data management at Dell, at a pre-briefing on March 15. Customers also want to control how their workloads use the available hardware. “Nobody wants to have idle GPUs,” Findlen said.

The Dell AI Factory, developed with NVIDIA’s help and built to support NVIDIA products, is meant to narrow down “vast possibilities” into “impactful use cases,” said Varun Chhabra, Dell senior vice president of infrastructure and telecom marketing, at the pre-briefing.

According to a Gartner study published in March 2024, 83% of 459 technology service providers polled from October to December 2023 had deployed or were piloting generative AI within their organizations.

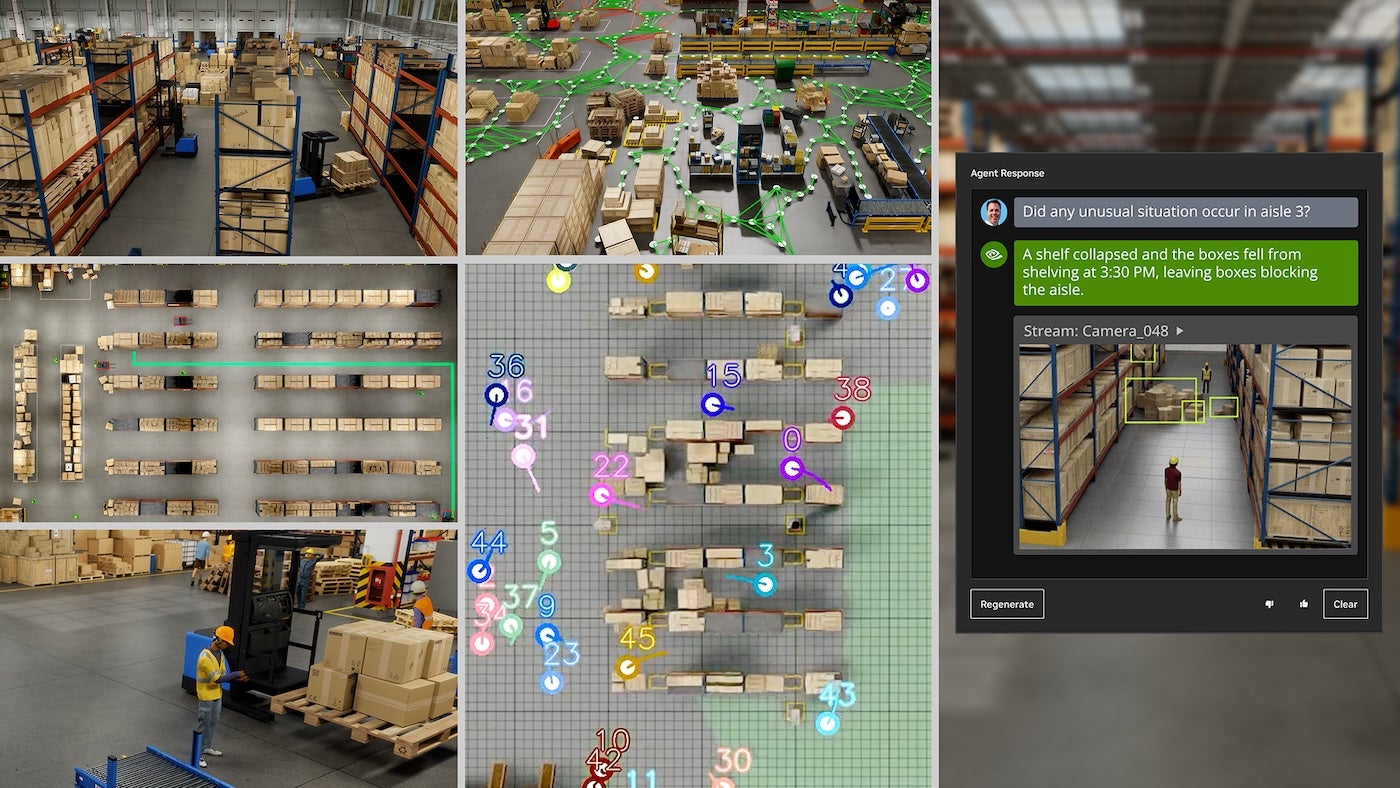

Organizations focusing on edge AI took up a large portion of the show floor at NVIDIA GTC 2024, with a wide variety of use cases: robotics, automotive, industrial, warehousing, healthcare, critical systems and retail.

Many of these edge AI use cases were powered by NVIDIA’s Jetson platform for robotics. NVIDIA Metropolis microservices on Jetson Orin let developers use API calls to set up generative AI capabilities at the edge, making robots more responsive and adaptable to their environments.

For example, during the keynote, NVIDIA CEO Jensen Huang showed a demonstration of warehouse robots that automatically rerouted around an obstacle (Figure B).

Organizations are also working on spinning up private generative AI that can access proprietary data securely while offering the flexibility of a public service like ChatGPT.

Mistral AI came up often on the show floor in connection with private AI services; the company provides open source large language models that customers can host on their own servers.

Copilots aren’t new — chatbots like ChatGPT set off the generative AI boom, after all. Since then, “copilot” has become almost a generic term for a chatbot that can answer questions about data.

NVIDIA GTC featured a wide range of AI copilots that draw answers from specific, company-owned structured and unstructured data. For example, the SoftServe Gen AI Industrial Copilot reads from a robot arm’s maintenance manual to create step-by-step repair instructions and can highlight the parts the technician needs to replace on a 3D model.

Another common trend in enterprise copilots was citation. NexGen Cloud showed how its Hyperstack cloud platform (developed by SoftServe and accelerated by NVIDIA GPUs) could run a copilot that answers questions about a video and points back to the specific moments in the video transcript from which it drew its answers. The combination of proprietary, private data sources with copilot-style chatbot functionality continues to be the driving trend in generative AI for enterprise.

Disclaimer: NVIDIA paid for my airfare, accommodations and some meals for the NVIDIA GTC event held March 18 – 21 in San Jose, California.