Open directories are a severe security threat to organizations as they might leak sensitive data, intellectual property or technical data that could allow an attacker to compromise the entire system. According to new research from Censys, an internet intelligence platform, more than 2,000 TB of unprotected data, including full databases and documents, are currently accessible in open directories around the world.


Open directories are folders that are accessible directly via a browser and made available by the web server. This happens when a web server has been configured to provide a directory listing when no index file is found in the specified folder. Depending on the web server’s configuration, a user may or may not be allowed to see the folder’s content. According to Censys, the default behavior for most web servers is not to render the directory listing.
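To see the mechanism itself, here is a minimal local sketch built on Python's standard-library `http.server`. This is a development server, not one of the production servers Censys refers to, and it *does* render a listing when a requested folder has no `index.html`. The file name `backup.sql` and the helper `fetch_root` are made up for the demonstration, which needs Python 3.7 or later.

```python
import functools
import http.server
import tempfile
import threading
import urllib.request
from pathlib import Path


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    """Standard static-file handler with per-request logging silenced."""

    def log_message(self, format, *args):
        pass


def fetch_root(directory: str) -> str:
    """Serve `directory` on a random localhost port and return the body of GET /."""
    handler = functools.partial(QuietHandler, directory=directory)
    with http.server.ThreadingHTTPServer(("127.0.0.1", 0), handler) as server:
        threading.Thread(target=server.serve_forever, daemon=True).start()
        try:
            port = server.server_address[1]
            # Bypass any proxy configured in the environment; this request is local.
            opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
            with opener.open(f"http://127.0.0.1:{port}/", timeout=5) as resp:
                return resp.read().decode("utf-8", errors="replace")
        finally:
            server.shutdown()


with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "backup.sql").write_text("-- placeholder dump\n")
    # No index file yet: the server falls back to an auto-generated listing.
    without_index = fetch_root(tmp)
    # Once an index file exists, the server returns it instead of a listing.
    Path(tmp, "index.html").write_text("<h1>Hello</h1>")
    with_index = fetch_root(tmp)

print("Directory listing for" in without_index, "backup.sql" in without_index)  # True True
print("Directory listing for" in with_index)  # False
```

The same folder flips from "exposed" to "not exposed" simply because an index file appears, which is why a missing `index.html` on a server with listing enabled is enough to publish everything in the folder.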

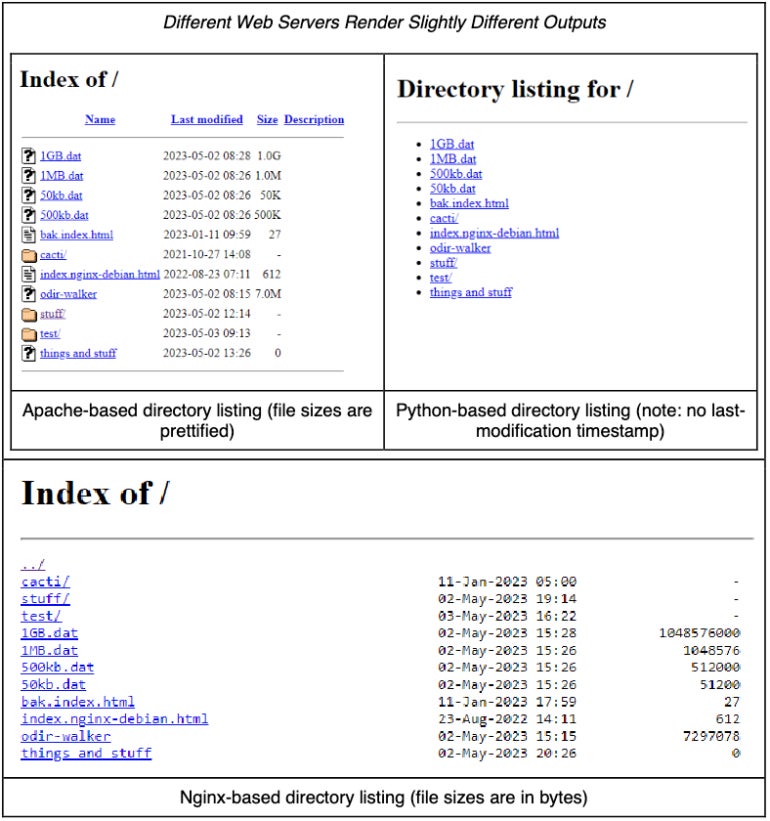

Open directories appear with a few differences depending on their web server (Figure A).

Figure A

Open directories can be found with Google Dorks, search queries that use Google's advanced operators to surface specific content, such as directory listings. A similar search can also be done via Censys.
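As an illustration for defenders checking their own domains, the sketch below builds such a query. It relies on Google's real `site:` and `intitle:` operators and on the fact that Apache and nginx title their listings "Index of /..."; the helper names `open_directory_dork` and `search_url` are hypothetical, and the query syntax for Censys is not shown here.

```python
from typing import Optional
from urllib.parse import urlencode


def open_directory_dork(domain: str, keyword: Optional[str] = None) -> str:
    """Build a Google query for listing pages on one domain (hypothetical helper)."""
    # site: restricts results to the domain; intitle:"index of" matches the
    # page title Apache and nginx use for auto-generated listings.
    parts = [f"site:{domain}", 'intitle:"index of"']
    if keyword:
        # Optionally require a file-name fragment such as "backup".
        parts.append(f'"{keyword}"')
    return " ".join(parts)


def search_url(query: str) -> str:
    """Turn the query into a Google search URL (urlencode quotes ':', '"' and spaces)."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = open_directory_dork("example.com", "backup")
print(query)  # site:example.com intitle:"index of" "backup"
print(search_url(query))
```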

Why don’t search engines prohibit people from seeing those open directories? Censys researchers told TechRepublic that “while this may initially sound like a reasonable approach, it’s a bandage on the underlying issue of open directories being exposed on the internet in the first place. Just because a search engine doesn’t display the results doesn’t mean nefarious actors wouldn’t be able to find them, but it could make it harder for defenders to easily find and remediate these instances. This also assumes that all open directories are ‘bad.’ While many of them are likely unintentionally exposed, it doesn’t mean they all are.”

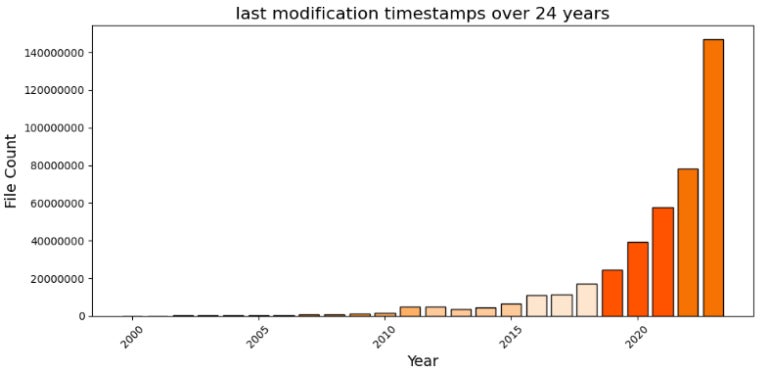

Censys found 313,750 different hosts with a total of 477,330,039 files stored in those open directories. Based on the files' last-modification timestamps, the vast majority were created or modified in 2023 (Figure B).

Figure B

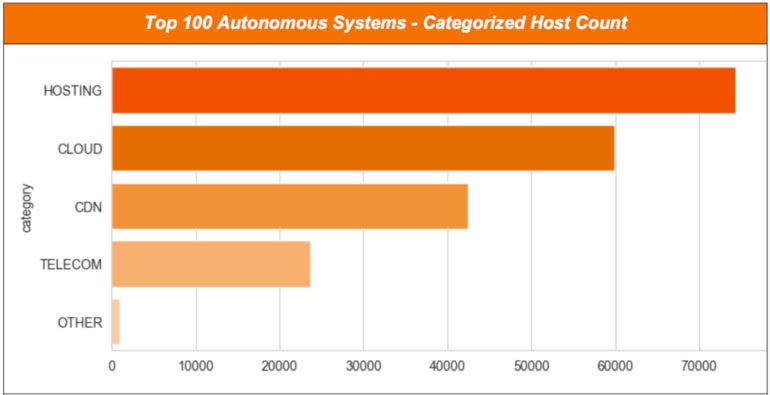
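Listing pages usually show a last-modified column, and a per-year breakdown like Figure B can be sketched by parsing those values. This assumes the timestamps have already been extracted from the listing and use either Apache's layout ("2023-05-14 10:32") or nginx's ("14-May-2023 10:32"); Censys's actual parsing method is not described in the research.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Optional, Tuple

# Assumed timestamp layouts: Apache mod_autoindex and nginx autoindex.
FORMATS = ("%Y-%m-%d %H:%M", "%d-%b-%Y %H:%M")


def parse_year(stamp: str) -> Optional[int]:
    """Return the year of a listing timestamp, or None if no known layout matches."""
    for fmt in FORMATS:
        try:
            return datetime.strptime(stamp.strip(), fmt).year
        except ValueError:
            continue
    return None


def files_per_year(stamps: Iterable[str]) -> Tuple[Counter, int]:
    """Count files by last-modified year; also report how many stamps failed to parse."""
    years: Counter = Counter()
    unparsed = 0
    for stamp in stamps:
        year = parse_year(stamp)
        if year is None:
            unparsed += 1
        else:
            years[year] += 1
    return years, unparsed


sample = ["2023-05-14 10:32", "02-Nov-2023 08:01", "2021-01-30 17:45", "-"]
years, unparsed = files_per_year(sample)
print(sorted(years.items()), unparsed)  # [(2021, 1), (2023, 2)] 1
```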

To get a better idea of which hosting services are most used, Censys looked at where these open directories are hosted at the autonomous system (AS) level and split the top 100 ASes into four categories: hosting, cloud, content delivery networks (CDNs) and telecom.

Hosting: Most of the data is hosted by companies that provide basic managed and unmanaged hosting services, such as virtual hosting, shared hosting, virtual private servers and dedicated servers, to individuals and small to medium-sized organizations.

Cloud providers follow; they differ from traditional hosting companies in that they offer many more ways to store and access data.

CDNs such as Akamai or Cloudflare come third (Figure C), ahead of telecoms, whose customers include more individuals than organizations compared with the other categories.

Figure C

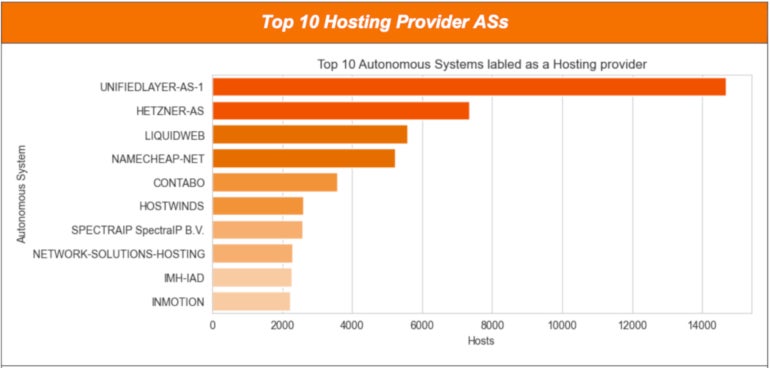

In the hosting category, the largest number of exposed open directories is found at UnifiedLayer-AS-1, with more than 14,000 unique hosts containing open directories. Hetzner-AS comes second with more than 7,000 hosts, followed by Liquid Web with approximately 5,500 hosts (Figure D).

Figure D

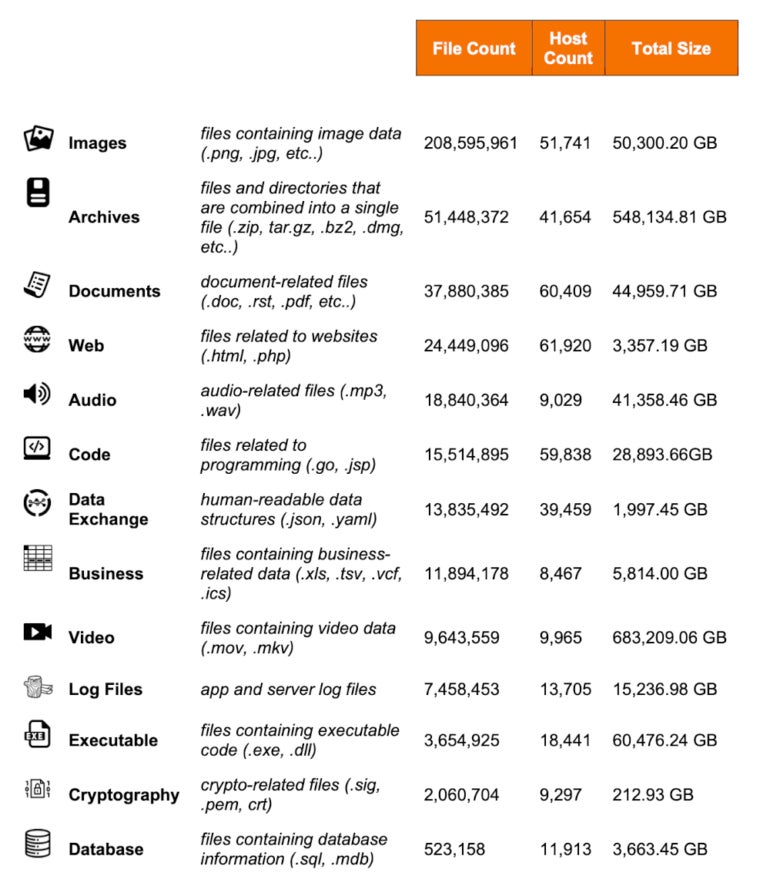
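Rankings like Figure D count unique hosts rather than directories, so a host exposing several folders is counted once. The sketch below shows that deduplication and a roll-up into categories. The AS-to-category table is hypothetical: only the first two AS names come from the Censys figures, "EXAMPLE-CDN" is invented, and the IP addresses are from documentation ranges.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical AS-name-to-category table; unknown ASes fall into "other".
AS_CATEGORY = {
    "UNIFIEDLAYER-AS-1": "hosting",
    "HETZNER-AS": "hosting",
    "EXAMPLE-CDN": "cdn",
}


def hosts_per_as(records: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """Count unique hosts per AS from (host_ip, as_name) pairs."""
    hosts: Dict[str, Set[str]] = defaultdict(set)
    for ip, as_name in records:
        # A host with several open directories is counted once per AS.
        hosts[as_name.upper()].add(ip)
    return {as_name: len(ips) for as_name, ips in hosts.items()}


def hosts_per_category(per_as: Dict[str, int]) -> Counter:
    """Roll per-AS host counts up into the categories of AS_CATEGORY."""
    totals: Counter = Counter()
    for as_name, count in per_as.items():
        totals[AS_CATEGORY.get(as_name, "other")] += count
    return totals


records = [
    ("192.0.2.10", "UnifiedLayer-AS-1"),
    ("192.0.2.10", "UnifiedLayer-AS-1"),  # same host, second open directory
    ("192.0.2.11", "UnifiedLayer-AS-1"),
    ("198.51.100.7", "Hetzner-AS"),
    ("203.0.113.5", "Example-CDN"),
]
per_as = hosts_per_as(records)
print(per_as)  # {'UNIFIEDLAYER-AS-1': 2, 'HETZNER-AS': 1, 'EXAMPLE-CDN': 1}
print(hosts_per_category(per_as))
```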

Censys categorized the files stored in these open directories based on the file extensions (Figure E).

Figure E
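Categorizing by extension can be sketched as a lookup table keyed on each file's last suffix. The table below is an assumption for illustration; Censys's exact buckets may differ.

```python
from collections import Counter
from pathlib import PurePosixPath
from typing import Iterable

# Hypothetical extension-to-category table.
CATEGORIES = {
    "log": {".log"},
    "database": {".sql", ".db", ".sqlite", ".mdb"},
    "spreadsheet": {".xls", ".xlsx", ".csv", ".ods"},
    "archive": {".zip", ".tar", ".gz", ".7z", ".rar"},
    "email": {".eml", ".msg", ".pst", ".mbox"},
}
EXT_TO_CATEGORY = {ext: cat for cat, exts in CATEGORIES.items() for ext in exts}


def categorize(path: str) -> str:
    """Map a listed file path to a category using its last extension (case-insensitive)."""
    suffix = PurePosixPath(path).suffix.lower()
    return EXT_TO_CATEGORY.get(suffix, "other")


def category_counts(paths: Iterable[str]) -> Counter:
    return Counter(categorize(p) for p in paths)


listing = ["/logs/access.log", "/backup/shop.SQL", "/backup/shop.sql.gz", "/docs/readme.txt"]
print(category_counts(listing))
```

Because only the last suffix is used, a compressed dump such as `shop.sql.gz` lands in "archive" rather than "database"; a real classifier would need to decide how to treat such compound extensions.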

Log files are particularly interesting for an attacker because they might contain sensitive information about the hosting infrastructure and the way it is accessed. Application debug logs in particular could provide a lot of useful information about the environment, while access logs could contain IP addresses. An attacker could exploit all this information to run targeted attacks, either by finding exploitable vulnerabilities or by gaining insight into the relationships between applications and the users connecting to them.
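Access logs in Apache's Common or Combined Log Format start each line with the client address, which is why an exposed log directly leaks IP addresses. The following sketch counts those addresses; it assumes host-name lookups are off (so the first field is an IP) and uses documentation-range addresses in the sample.

```python
import ipaddress
from collections import Counter
from typing import Iterable


def client_ips(log_lines: Iterable[str]) -> Counter:
    """Count client addresses in Common/Combined Log Format lines (first field)."""
    counts: Counter = Counter()
    for line in log_lines:
        fields = line.split(maxsplit=1)
        if not fields:
            continue  # blank line
        try:
            ip = ipaddress.ip_address(fields[0])
        except ValueError:
            continue  # host name or malformed entry; skipped in this sketch
        counts[str(ip)] += 1
    return counts


sample = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /admin/ HTTP/1.1" 200 512',
    '203.0.113.5 - - [10/Oct/2023:13:56:02 +0000] "POST /login HTTP/1.1" 302 0',
    '2001:db8::1 - - [10/Oct/2023:14:01:10 +0000] "GET / HTTP/1.1" 200 1024',
]
print(client_ips(sample).most_common(1))  # [('203.0.113.5', 2)]
```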

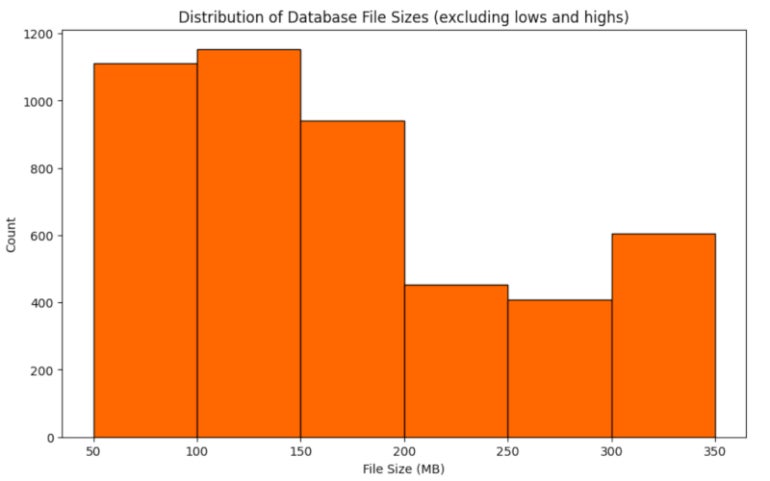

Databases are also very sensitive because they might contain personally identifiable information (PII), trade secrets, intellectual property and technical information about the organization or its infrastructure. Censys discovered 1,154 database files between 100 and 150 MB in size in the open directories, and 605 database files between 300 and 350 MB (Figure F).

Figure F

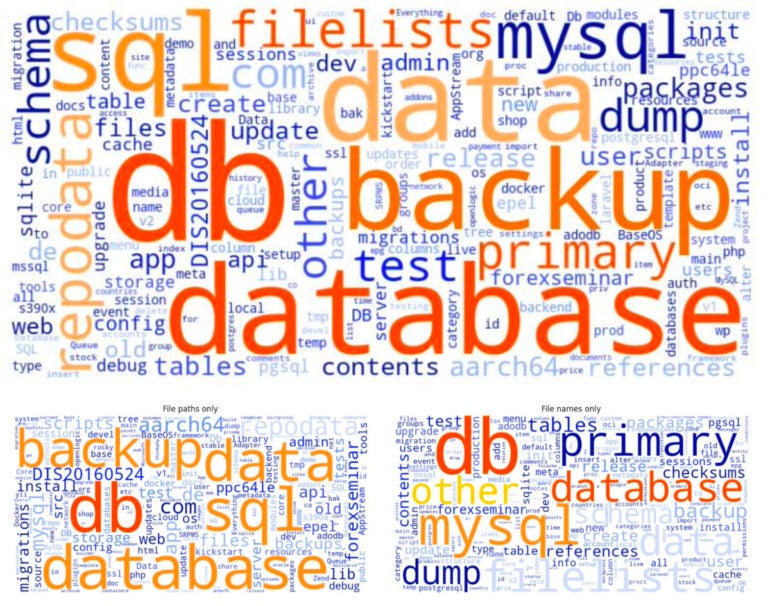
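A size distribution like Figure F can be produced by bucketing file sizes. This sketch assumes 50 MB bins (matching ranges such as 100-150 MB) and decimal megabytes (10^6 bytes); the research does not state which binning or unit Censys used.

```python
from collections import Counter
from typing import Iterable

MB = 1_000_000  # assumed decimal megabytes
BIN_MB = 50     # assumed bin width


def size_bins(sizes_in_bytes: Iterable[int]) -> Counter:
    """Group file sizes into [start, start + 50) MB buckets labelled 'start-end MB'."""
    bins: Counter = Counter()
    for size in sizes_in_bytes:
        start = (size // (BIN_MB * MB)) * BIN_MB
        bins[f"{start}-{start + BIN_MB} MB"] += 1
    return bins


sizes = [120_000_000, 149_999_999, 310_500_000, 42_000]
print(size_bins(sizes))
```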

Censys did not view the content of those database files, but the researchers did look at the frequency of words within the file paths and file names (Figure G).

Figure G

The 713 occurrences of the word “backup” suggest files that are part of database backups, while the 334 occurrences of “dump” suggest full copies of databases. Other words used in database file paths and names also point to potentially sensitive information being shared (Figure H).

Figure H
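Word frequencies in file paths can be approximated by splitting each path into lowercase alphanumeric tokens and counting them. This is an assumption about the method, not Censys's documented approach; note that it counts occurrences, so a path containing "backup" twice contributes two.

```python
import re
from collections import Counter
from typing import Iterable

TOKEN = re.compile(r"[a-z0-9]+")


def path_word_counts(paths: Iterable[str]) -> Counter:
    """Count lowercase alphanumeric tokens across file paths and names."""
    counts: Counter = Counter()
    for path in paths:
        # Separators such as '/', '_', '-' and '.' all split tokens.
        counts.update(TOKEN.findall(path.lower()))
    return counts


paths = [
    "/var/www/backup/db_backup_2023.sql",
    "/files/prod-dump.sql",
    "/old/Backup/users.sql",
]
counts = path_word_counts(paths)
print(counts["backup"], counts["dump"], counts["sql"])  # 3 1 3
```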

Censys found that 43,533 database files contained a development-related word (dev, test, staging) and 25,427 database files contained a production-related word (prod, live, prd). This is a potential goldmine of database-related information that attackers could use to exploit vulnerabilities and weaknesses or to compromise sensitive information.

Other words might indicate less severe issues, such as “schema,” which might indicate a database schema rather than full content; “aarch64/ppc64le/EPEL,” which might point to databases distributed with open-source software; and “references,” which probably indicates test data.
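The development/production split can be sketched by matching the keywords Censys lists against whole path tokens. Whole-token matching is a design choice of this sketch: it keeps "device" from counting as "dev", at the cost of missing longer forms such as "development". A path containing both kinds of keyword is counted in both categories.

```python
import re
from typing import Dict, Iterable, List

TOKEN = re.compile(r"[a-z0-9]+")

# Keyword sets taken from the Censys findings.
ENVIRONMENT_WORDS = {
    "development": {"dev", "test", "staging"},
    "production": {"prod", "live", "prd"},
}


def environment_tags(path: str) -> List[str]:
    """Return the environments whose keywords appear as whole tokens in the path."""
    tokens = set(TOKEN.findall(path.lower()))
    return sorted(env for env, words in ENVIRONMENT_WORDS.items() if tokens & words)


def count_by_environment(paths: Iterable[str]) -> Dict[str, int]:
    counts = {env: 0 for env in ENVIRONMENT_WORDS}
    for path in paths:
        for env in environment_tags(path):
            counts[env] += 1
    return counts


paths = ["/db/prod/orders.sql", "/staging/users.sql", "/device-list.sql", "/live_test.db"]
print(count_by_environment(paths))  # {'development': 2, 'production': 2}
```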

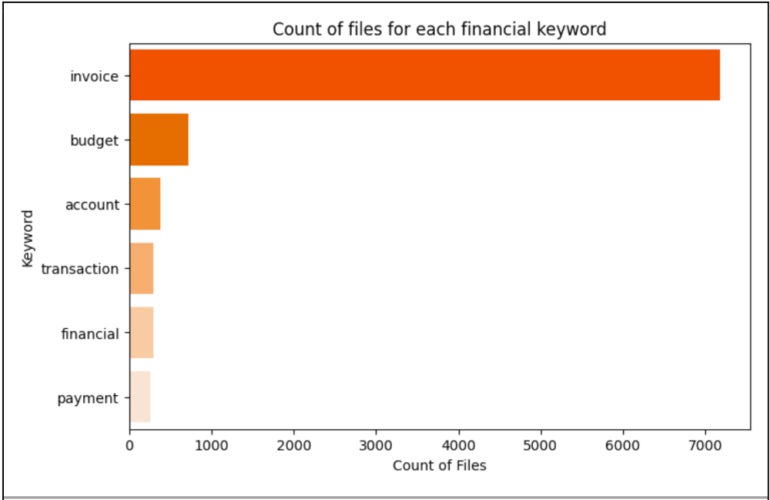

Aside from database files, spreadsheets might also reveal sensitive information. More than 370 GB of spreadsheet files are exposed, some of which have sensitive words in their file names, such as invoice, budget, account, transaction, financial or payment (Figure I).

Figure I

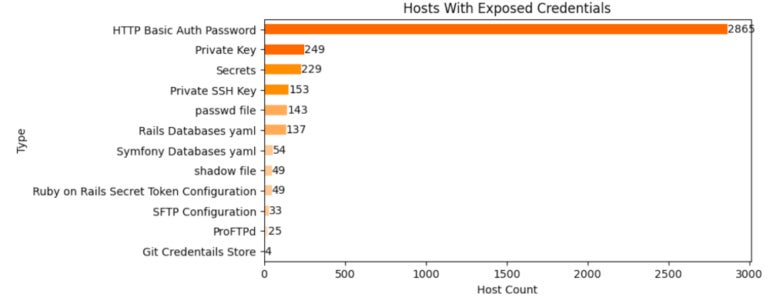
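Totalling exposed spreadsheet volume and flagging sensitive names can be combined in one pass over a listing of (path, size) pairs. The extension set and the use of decimal gigabytes are assumptions; the sensitive words are the ones Censys reports. Substring matching is used here so that plurals such as "invoices" are caught.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Tuple

# Assumed definition of "spreadsheet"; sensitive words from the Censys findings.
SPREADSHEET_EXTS = {".xls", ".xlsx", ".csv", ".ods"}
SENSITIVE_WORDS = ("invoice", "budget", "account", "transaction", "financial", "payment")


def scan_spreadsheets(listing: Iterable[Tuple[str, int]]) -> Tuple[List[str], float]:
    """Return spreadsheets with a sensitive word in their name, plus total spreadsheet size in GB."""
    flagged: List[str] = []
    total_bytes = 0
    for path, size in listing:
        p = PurePosixPath(path)
        if p.suffix.lower() not in SPREADSHEET_EXTS:
            continue
        total_bytes += size
        name = p.name.lower()
        if any(word in name for word in SENSITIVE_WORDS):
            flagged.append(path)
    return flagged, total_bytes / 10**9


listing = [
    ("/share/Invoices_Q3.xlsx", 2_500_000),
    ("/share/team-roster.csv", 500_000),
    ("/share/budget.pdf", 1_000_000),  # not a spreadsheet, ignored
]
flagged, gigabytes = scan_spreadsheets(listing)
print(flagged, gigabytes)
```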

Potentially exposed credentials can also be found in open directories in a variety of files (Figure J).

Figure J

HTTP Basic Authentication password files, known as .htpasswd files, are text-based configuration files that might contain credentials. Although the passwords in these files are not stored in plain text, their hashes might still be cracked through brute-force techniques. Other files containing passwords or authentication material include SSH private keys, application credentials and Unix password files.
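Each .htpasswd line has the form `user:hash`, and Apache's `htpasswd` tool marks its hash formats with prefixes: `$2y$` for bcrypt, `$apr1$` for an Apache-specific MD5 variant and `{SHA}` for SHA-1, with anything else typically being legacy `crypt()`. The sketch below classifies an exposed file by those prefixes; treating everything except bcrypt as weaker (faster to brute-force) is this sketch's assumption, and the hash values are placeholders.

```python
from typing import Dict, Iterable, List


def hash_scheme(hash_field: str) -> str:
    """Identify the htpasswd hash format from its prefix."""
    if hash_field.startswith(("$2y$", "$2a$", "$2b$")):
        return "bcrypt"
    if hash_field.startswith("$apr1$"):
        return "apr1-md5"
    if hash_field.startswith("{SHA}"):
        return "sha1"
    return "crypt-or-unknown"


def audit_htpasswd(lines: Iterable[str]) -> Dict[str, str]:
    """Map each user in an .htpasswd file to the hash scheme protecting it."""
    result: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        user, sep, hash_field = line.partition(":")
        if not sep:
            continue  # not a user:hash entry
        result[user] = hash_scheme(hash_field)
    return result


def weak_users(schemes: Dict[str, str]) -> List[str]:
    """Users whose hash is not bcrypt (the sketch's notion of "weaker")."""
    return sorted(user for user, scheme in schemes.items() if scheme != "bcrypt")


entries = [
    "alice:$2y$05$placeholderplaceholder",
    "bob:$apr1$salt$placeholder",
    "carol:{SHA}placeholder=",
]
schemes = audit_htpasswd(entries)
print(schemes)
print(weak_users(schemes))  # ['bob', 'carol']
```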

Other file types might also represent threats to the organizations exposing them. For instance, archives and emails might leak internal, sensitive or confidential information; sensitive code or configuration files might also leak that information and could be exploited by attackers to find more vulnerabilities.

Since most major web servers do not enable directory listing by default for folders that lack an index file, several hypotheses might explain why so many open directories are available online.

We asked Censys researchers whether cybercriminals might create such open directories to infect visitors with malware. They answered, “It’s possible, but there are far more effective malware delivery mechanisms than hoping someone will browse to an open directory and download a file. In cases where malware is hosted in open directories, it’s more likely that the files are remotely downloaded to another host by a threat actor once they gain access to said other host.”

Organizations should constantly monitor their infrastructure for open directories. Sharing files via open directories is a bad IT practice that should stop; file transfers should instead use other methods or protocols, such as SFTP or secure internal or external storage. When possible, multifactor authentication should be deployed to protect those folders.
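A basic monitoring sweep could fetch each URL in an organization's own asset inventory and flag pages whose title matches an auto-generated listing. This is a heuristic sketch: the inventory, the `User-Agent` string and the function names are hypothetical, and servers whose listings use other titles (IIS, for example) would be missed.

```python
import http.client
import re
import urllib.request
from typing import Callable, Iterable, List

# Apache httpd and nginx title listings "Index of /...";
# Python's http.server uses "Directory listing for /...".
LISTING_TITLE = re.compile(r"<title>\s*(Index of|Directory listing for) /", re.IGNORECASE)


def looks_like_listing(html: str) -> bool:
    return LISTING_TITLE.search(html) is not None


def fetch(url: str, timeout: float = 10.0) -> str:
    """Fetch at most 64 KiB; a listing's title appears near the top of the page."""
    request = urllib.request.Request(url, headers={"User-Agent": "open-dir-audit (hypothetical)"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read(65536).decode("utf-8", errors="replace")


def find_open_directories(urls: Iterable[str], fetcher: Callable[[str], str] = fetch) -> List[str]:
    """Return the URLs (from an inventory you own) that serve a directory listing."""
    exposed: List[str] = []
    for url in urls:
        try:
            body = fetcher(url)
        except (OSError, http.client.HTTPException):
            # URLError, HTTPError and timeouts derive from OSError; skip unreachable URLs.
            continue
        if looks_like_listing(body):
            exposed.append(url)
    return exposed
```

Passing a different `fetcher` keeps the detection logic testable offline; in production the default `fetch` would be used against the organization's own hosts.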

Some open directories are made available on purpose, while others result from mistakes. Organizations are not the only entities to expose data this way: individuals do too, and they might not know how to secure a web server. Reporting open directories to those individuals is difficult because their websites, often built with generic services that do not take security seriously, frequently lack any way to report security issues. In comparison, large organizations often have a proper security.txt file at their root folder or a security contact who is easy to reach on sites such as LinkedIn.
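For those who find an exposed directory and want to report it, security.txt is specified in RFC 9116: the file belongs at `/.well-known/security.txt`, a copy at the top-level path is allowed for legacy compatibility, and each `Contact:` field holds a reporting URI. The sketch below lists the two locations to try and extracts the contacts from a file's text; it does not verify PGP signatures, and the helper names are hypothetical.

```python
from typing import List


def security_txt_urls(domain: str) -> List[str]:
    """Locations to try: the RFC 9116 well-known path first, then the legacy root path."""
    return [
        f"https://{domain}/.well-known/security.txt",
        f"https://{domain}/security.txt",
    ]


def contacts(security_txt: str) -> List[str]:
    """Extract Contact field values (mailto:, https:, tel: URIs) from a security.txt body."""
    found: List[str] = []
    for raw in security_txt.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments
        name, sep, value = line.partition(":")
        # Field names are case-insensitive; split on the first colon only.
        if sep and name.strip().lower() == "contact":
            found.append(value.strip())
    return found


body = """# Report security issues here
Contact: mailto:security@example.com
Contact: https://example.com/vulnerability-disclosure
Expires: 2030-12-31T23:00:00.000Z
"""
print(contacts(body))
```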

Disclosure: I work for Trend Micro, but the views expressed in this article are mine.